The terms validity and reliability are used to judge the quality of research. They indicate how well a test, technique, or method measures something. Validity is concerned with the accuracy of a measure, while reliability is concerned with its consistency.

What is validity

Validity and reliability go hand in hand in psychology and other social research. What does valid mean? Validity, or construct validity, is the extent to which a measure accurately represents the underlying concept it is meant to measure. For example, is a measure of compassion really measuring compassion, and not a different construct such as empathy? Validity can be assessed using theoretical or empirical methods, and ideally both should be used.

When a measure has excellent test-retest reliability and internal consistency, researchers can be more confident that its scores represent what they are supposed to represent. However, there must be more to it than that, because a measure can be highly reliable but have no validity at all.

Consider someone who believes that the length of a person’s index finger reflects his or her self-esteem, and who therefore measures self-esteem by holding a ruler up to people’s index fingers. Although this measure would have extremely good test-retest reliability, it would be completely invalid. The fact that one person’s index finger is a centimeter longer than another’s says nothing about which of them has higher self-esteem.

Types of validity

Theoretical assessment of validity focuses on how well the idea of a theoretical construct is translated into, or represented in, an operational measure. This type of validity is called translational validity and consists of two subtypes:

- Content validity

- Face validity

A panel of expert judges usually assesses translational validity by rating each item (indicator) on how well it fits the conceptual definition of the construct.
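One simple way to summarize such expert ratings is sketched below. It assumes a hypothetical panel of five judges rating each item on a 4-point relevance scale, and reports the share of judges who rated an item 3 or 4 (a summary often called the item-level content validity index). The item names, the ratings, and the 0.8 retention cutoff are illustrative assumptions, not values from the text.

```python
# Hypothetical relevance ratings from five expert judges per item,
# on an assumed 4-point scale (1 = not relevant ... 4 = highly relevant).
ratings = {
    "item_1": [4, 4, 3, 4, 4],
    "item_2": [3, 4, 4, 3, 4],
    "item_3": [2, 1, 3, 2, 2],
}

CUTOFF = 0.8  # assumed threshold for keeping an item, chosen for illustration


def proportion_relevant(item_ratings):
    """Share of judges who rated the item 3 or 4 (i.e. relevant)."""
    return sum(r >= 3 for r in item_ratings) / len(item_ratings)


for item, item_ratings in ratings.items():
    p = proportion_relevant(item_ratings)
    decision = "keep" if p >= CUTOFF else "revise or drop"
    print(f"{item}: {p:.2f} -> {decision}")
```

Here the first two items are endorsed by every judge, while only one of five judges considers the third item relevant, so it would be flagged for revision.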

Content validity

Content validity is the extent to which a measure “covers” the construct of interest. If a researcher conceptually defines test anxiety as involving both sympathetic nervous system activation (leading to nervous feelings) and negative thoughts, then the measure of test anxiety should include items about both nervous feelings and negative thoughts. As another example, attitudes are often defined as a combination of thoughts, feelings, and actions directed toward something. By this conceptual definition, a person has a positive attitude toward exercise to the extent that he or she thinks positive thoughts about exercising, feels good about exercising, and actually exercises.

A measure of people’s attitudes about exercise would therefore have to reflect all three of these elements to have good content validity. Content validity, like face validity, is rarely assessed statistically. Instead, it is evaluated by carefully comparing the measurement method with the conceptual definition of the construct.

Face validity

Face validity is the extent to which a measurement method appears “on its face” to measure the construct of interest. Most people would expect a self-esteem questionnaire to include items asking whether they see themselves as a person of worth and whether they think they have good qualities. A questionnaire that included these kinds of items would therefore have good face validity.

By contrast, the finger-length method of measuring self-esteem seems to have nothing to do with self-esteem and therefore has poor face validity. Although face validity can be assessed quantitatively, for example by having a large sample of people rate whether a measure appears to measure what it is intended to measure, it is usually assessed informally.

Empirical assessment of validity examines, on the basis of empirical observations, how well a given measure relates to one or more external criteria. This type of validity is called criterion-related validity and includes four subtypes:

Convergent validity and discriminant validity

Convergent validity refers to how closely a measure relates to the construct it is intended to measure. Discriminant validity refers to the extent to which a measure does not measure (that is, discriminates from) other constructs that it is not supposed to measure. Convergent and discriminant validity are usually assessed together for a set of related constructs.

Concurrent validity

Concurrent validity examines how well a measure relates to other concrete criteria that are presumed to occur at the same time.

Predictive validity

Predictive validity is the extent to which a measure successfully predicts a future outcome that it is theoretically expected to predict.

Whereas translational validity examines whether a measure is a good reflection of its underlying construct, criterion-related validity examines whether a given measure behaves the way it should, given the theory of that construct.

What is reliability

What does reliable mean? Reliability is the degree to which a measure of a construct is consistent or dependable. In other words, if we use the same scale to measure the same thing several times, do we get essentially the same result each time, assuming the underlying phenomenon does not change?

An example of an unreliable measurement is asking people to guess your weight. Their guesses are likely to differ from one another, so the “guessing” technique is generally unreliable. A weighing scale is a more reliable measure, because you are likely to get the same reading every time you check your weight, unless your weight has actually changed between measurements.

Note that reliability implies consistency, not accuracy. If the scale is tampered with, for example to shave twenty pounds off your true weight just to make you feel good, it will not show your true weight and is therefore not a valid measure. Nevertheless, the miscalibrated scale will consistently give you the same reading, twenty pounds less than your true weight, so the measure is still reliable.
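The tampered-scale example can be made concrete with a short simulation: readings that barely vary (high reliability) yet sit about twenty pounds below the true weight (poor validity). The true weight, the size of the random noise, and the number of readings are hypothetical values chosen for illustration.

```python
import random
import statistics

TRUE_WEIGHT = 170.0  # hypothetical true weight in pounds
OFFSET = -20.0       # the tampered scale shaves 20 lb off every reading

rng = random.Random(3)


def tampered_reading():
    # Constant systematic offset plus small random noise (assumed SD of 0.2 lb).
    return TRUE_WEIGHT + OFFSET + rng.gauss(0, 0.2)


readings = [tampered_reading() for _ in range(10)]
spread = statistics.stdev(readings)             # small: consistent, i.e. reliable
bias = statistics.mean(readings) - TRUE_WEIGHT  # about -20: inaccurate, i.e. not valid
print(f"spread = {spread:.2f} lb, bias = {bias:.1f} lb")
```

A small spread signals reliability; the large constant bias is invisible to any consistency check and shows up only when readings are compared with the true weight.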

The following suggestions can help make measures more reliable. If, as for many social science researchers, the measurement involves asking other people for information, reliability can be improved:

- By replacing data collection techniques that depend more on researchers’ subjectivity (e.g. observations) with those that depend less on it (e.g. questionnaires).

- By asking only questions that respondents are likely to know the answers to.

- By avoiding ambiguous items, for example by stating explicitly whether you are asking about annual salary.

- By simplifying the wording of items so that participants do not misinterpret them.


Types of reliability

The strategies above can improve the reliability of our measures, but they do not guarantee that the measures will be perfectly reliable. Measuring instruments must still be tested for reliability. Several methods for estimating reliability are discussed below.

- Inter-rater reliability

- Test-retest reliability

- Split-half reliability

- Internal consistency reliability

Inter-rater reliability

Inter-rater reliability, also known as inter-observer reliability, is a measure of consistency between two or more independent raters (observers) of the same construct. It is usually assessed in a pilot study and can be done in two ways, depending on the level of measurement of the construct. If the measure is categorical, a set of all categories is defined, raters assign each observation to a category, and the percentage agreement between the raters is an estimate of inter-rater reliability. For example, if two raters classify 100 observations into one of three possible categories and their ratings match for 75% of the observations, inter-rater reliability is 0.75. If the measure is continuous (interval or ratio scaled), the correlation between the two raters’ scores can serve as the estimate instead.

As another example, if you wanted to measure undergraduate students’ social skills, you could video-record them as they interact with another student they are meeting for the first time. You could then have two or more observers watch the videos and rate each student’s level of social skills. To the extent that each student does have some level of social skills that an attentive observer can detect, the different observers’ ratings should be highly correlated with each other. Inter-rater reliability would also have been assessed in Bandura’s Bobo doll study: the observers’ ratings of how many aggressive acts a particular child committed while playing with the Bobo doll should have been highly correlated.
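The percent-agreement estimate for categorical ratings can be sketched as follows. The ratings are hypothetical and constructed to reproduce the worked example in this section: two raters, 100 observations, three categories, and agreement on 75 of them.

```python
def percent_agreement(rater_a, rater_b):
    """Proportion of observations that two raters placed in the same category."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must rate the same observations")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


# Hypothetical ratings of 100 observations into three categories (A, B, C):
# the raters agree on the first 75 and disagree on the remaining 25.
categories = ["A", "B", "C"]
rater_a = [categories[i % 3] for i in range(100)]
rater_b = rater_a[:75] + [categories[(i + 1) % 3] for i in range(75, 100)]

print(percent_agreement(rater_a, rater_b))  # 0.75
```

Percent agreement does not correct for the agreement two raters would reach by chance alone; Cohen’s kappa is a widely used chance-corrected alternative.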

Test-retest reliability

Test-retest reliability is assessed by administering a measure to a group of people once, administering it again to the same group some time later, and then computing the test-retest correlation between the two sets of scores.

When researchers measure a construct that is assumed to be relatively stable over time, the scores they obtain should also be stable over time. Intelligence, for example, is generally thought to be consistent across time: a person who is highly intelligent today will still be highly intelligent a couple of months from now. This means that any good measure of intelligence should produce roughly the same score for this person in the coming weeks as it does today. Clearly, a measure that produces highly inconsistent scores over time cannot be a reliable measure of a construct that is supposed to be consistent.

The measure is considered reliable if the scores do not differ substantially between the two administrations. Test-retest reliability is estimated by correlating the observations from the two tests. Note that the time interval between the two tests is critical: the longer the interval, the more likely it is that the two observations will differ (due to random error), and the lower the test-retest reliability will be.

Split-half reliability

Split-half reliability is a measure of consistency between two halves of a measure of the same construct. For example, if you have a ten-item measure of a construct, randomly split the items into two sets of five. If the total number of items is odd, unequal halves are also acceptable.

Next, administer the entire instrument to a sample of respondents. Calculate each respondent’s total score for each half, and estimate split-half reliability as the correlation between the two sets of half scores. Because random errors are reduced as more items are added, the longer the instrument, the more likely it is that the two halves will be similar; this technique therefore tends to systematically overestimate the reliability of longer instruments.

Internal consistency reliability

Internal consistency is the consistency of people’s responses across the items on a multiple-item measure. In general, all the items on such a measure are supposed to reflect the same underlying construct, so people’s scores on those items should be correlated with each other. On the Rosenberg Self-Esteem Scale, for example, people who agree that they are a person of worth should also tend to agree that they have a number of good qualities. If people’s responses to the different items are not correlated with each other, it no longer makes sense to claim that they all measure the same construct. This applies to physiological and behavioral measures just as much as to self-report measures.

For instance, people might make a series of bets in a simulated game of roulette as a measure of their risk taking. This measure would be internally consistent to the extent that individual participants’ bets were consistently high or consistently low across trials.
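The text does not name a statistic for internal consistency; one widely used coefficient is Cronbach’s alpha, α = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below applies it to hypothetical roulette bets (rows are participants, columns are trials), so the bet amounts are invented for illustration.

```python
import statistics


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants-by-items table given as a list of rows."""
    k = len(scores[0])  # number of items (here: roulette trials)
    # Sample variance of each item (column) across participants
    item_vars = [statistics.variance(col) for col in zip(*scores)]
    # Sample variance of each participant's total across all items
    total_var = statistics.variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical bet sizes for five participants over four roulette trials
bets = [
    [10, 12, 11, 9],
    [50, 45, 55, 48],
    [20, 22, 18, 25],
    [80, 75, 85, 78],
    [35, 30, 40, 33],
]

alpha = cronbach_alpha(bets)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Because each hypothetical participant bets consistently high or consistently low across trials, alpha comes out close to 1; bets that varied randomly from trial to trial would drive it down.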

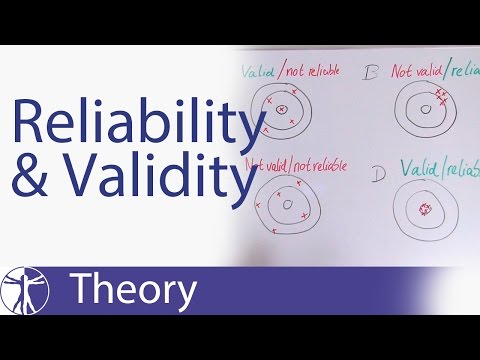

Difference between validity and reliability

The basic difference between validity and reliability lies in their definitions. Validity refers to how well you are measuring what you intend to measure; in other words, how accurate your measurement is. Reliability, on the other hand, refers to the extent to which an instrument measures in the same way each time it is used under the same conditions with the same participants.

Both are critical criteria for evaluating a measure. An instrument’s reliability can be assessed from the proportion of systematic variance in its scores, whereas its validity is determined by how much of the variance in observed scale scores reflects true differences among the people being measured.
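The “proportion of systematic variance” idea can be written compactly under classical test theory, a framework assumed here rather than named in the text. An observed score X is modeled as a true score T plus random error E, with T and E uncorrelated, and reliability is the share of observed-score variance that is true-score variance:

$$
X = T + E, \qquad \rho_{XX'} = \frac{\sigma_T^2}{\sigma_X^2} = \frac{\sigma_T^2}{\sigma_T^2 + \sigma_E^2}
$$

A constant bias, such as the tampered scale’s twenty-pound offset, shifts every score by the same amount and so leaves these variances unchanged; it threatens validity rather than reliability.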

| | Reliability | Validity |
|---|---|---|
| Definition | The degree to which a scale produces consistent results when measurements are repeated. | How well a research instrument measures what it is supposed to measure. |
| Measuring instrument | An instrument does not have to be valid to be reliable. | An instrument can only be valid if it is reliable. |
| Concerned with | Consistency | Accuracy |
| Value | Relatively low | High |
| Assessment | Relatively easy | More difficult |

Making sure your research is valid and reliable

Given the large number of flawed scientific studies, it is essential to be able to tell which ones are trustworthy and reliable. Where feasible, reliable studies use adequate sample sizes and random samples, minimize bias, and are conducted by researchers who are not motivated by money or by a personal wish to obtain particular outcomes.

1. Sample size

Ideally, the entire relevant population would be studied before a conclusion is drawn. However, surveying or studying an entire population is usually impractical and very costly. Instead, a sample is studied, the data are analyzed, and the results are generalized to the population of interest.

An appropriately sized sample is vital for accurate results and high statistical power, that is, the ability to detect a difference between the groups being compared when a genuine difference exists. A small sample makes false negatives and conflicting findings more likely. On the other hand, a sample should not be larger than necessary: an overly large sample can be unmanageable, and it wastes time and resources when a smaller sample would give an adequate answer.
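To make the link between sample size and statistical power concrete, the sketch below uses the standard normal-approximation formula for comparing two group means, n per group = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the standardized effect size. The effect sizes, the 5% significance level, and the 80% power target are conventional assumptions chosen for illustration, not values from the text.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-sided, two-sample comparison
    of means, using the normal approximation n = 2 * (z_a + z_b)^2 / d^2."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


print(n_per_group(0.5))  # 63 per group for a medium standardized effect
print(n_per_group(0.2))  # 393 per group for a small effect
```

Exact calculations based on the t distribution give slightly larger numbers, and because d appears squared in the denominator, halving the expected effect roughly quadruples the required sample.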

2. Randomized selection of participants

Randomization is essential for ensuring the validity of research. Randomized clinical trials typically assign participants at random either to receive a treatment or to receive a placebo (or no treatment). Before the trial begins, participants are allocated to the groups at random, using software or a random allocation algorithm, so that neither group is systematically biased.
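Random allocation can be sketched in a few lines. This is a minimal illustration of simple randomization into two equal-sized groups, assuming hypothetical participant IDs; real trials often use more elaborate schemes (for example, block or stratified randomization), and a fixed seed is used here only so that the allocation can be reproduced.

```python
import random


def randomize(participants, seed=None):
    """Randomly allocate participants to treatment and control groups of
    (near-)equal size; with an odd count, control gets the extra person."""
    shuffled = list(participants)  # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical participant IDs
participants = [f"P{i:02d}" for i in range(1, 21)]
treatment, control = randomize(participants, seed=2024)
print("treatment:", treatment)
print("control:  ", control)
```

Because chance alone decides who lands in each group, characteristics such as age or motivation are not systematically piled into one arm.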

3. Bias in research

Three common types of bias in research studies are:

- Measurement bias

Measurement bias refers to errors in the way relevant data are collected.

It can be caused by leading questions that favor one response over another, or by social desirability: most people want to present themselves in a positive light, so they may not answer honestly.

- Selection bias

Selection bias occurs when certain groups of people are systematically excluded from a sample, or when a sample is chosen for convenience.

Selection bias can also arise when a study compares treatment and control groups that are inherently different. If a study has selection bias, its results and conclusions are likely to be distorted.

- Intervention bias

Intervention bias arises when an intervention is delivered differently to the two groups, or when participants differ in how they are exposed to the factor of interest.

Factors affecting test validity

The following factors can undermine a test’s validity and reliability, so a well-designed test should avoid them:

- Unclear directions

- Test items with an inappropriate level of difficulty

- Test items that aren’t relevant to the outcomes being assessed

- Poorly constructed test items

- Reading vocabulary and sentence structures that are too difficult

- Ambiguity

- An inappropriate test duration

Conclusion

The goal of establishing validity and reliability in research is to ensure that the data are accurate and reproducible and that the results are correct. Evidence of validity and reliability is needed to establish the integrity and quality of a measuring instrument.